AI Regulations to Watch in 2026: A Complete and Plain-Language Guide to Europe’s Changing AI Rules

Artificial intelligence (AI) is no longer just a topic for news headlines or technology conferences. It is embedded in many systems we rely on daily, influencing work, finance, healthcare, and public services in ways most people never notice. From automated hiring tools and credit approvals to hospital software and financial systems, AI quietly shapes decisions that directly affect people’s careers, finances, and overall well-being. So how will AI regulation in Europe affect these systems in 2026?

Because of this growing influence, governments across the world are trying to create rules that control how AI is built and used. The challenge is not simple. Too few rules can put people at risk. Too many rules can slow down progress and innovation.

The European Union has taken one of the strongest positions globally when it comes to regulating artificial intelligence. But as the EU moves closer to 2026, its approach is shifting. Several major AI and digital laws may be delayed, softened, or rewritten entirely.

Here we explain how AI rules are changing in Europe, why those changes are happening, which laws are involved, who supports or opposes them, and why they matter. Let’s dive in.

What Are the Reasons for Regulating Artificial Intelligence?

Artificial intelligence systems are designed to process enormous amounts of information and make decisions at speeds far exceeding human capabilities. This ability is extremely valuable, allowing businesses, governments, and individuals to work efficiently, make informed decisions, and uncover insights that were previously impossible to detect. However, AI can also pose significant risks when poorly designed, trained on biased data, or used without proper oversight, monitoring, and ethical governance.

AI is already integrated into many sectors that directly affect people’s lives, which is why it matters which systems EU rules treat as high-risk. Examples of AI risks by sector include:

Finance: AI in banking enables real-time credit scoring, fraud detection, and risk assessment. Biased algorithms or errors in data can unjustly deny loans or inflate risk scores. A 2024 EU financial oversight study found that 17% of automated credit decisions reviewed showed unexplained disparities impacting marginalized groups.

Government Services: AI in public administration can optimize social services, immigration processing, and policing. Without transparency, citizens may not understand how decisions are made or why outcomes differ across cases.

Healthcare: AI assists in diagnostics, treatment planning, and predictive medicine. Errors or poorly validated models can have serious consequences for patient safety and treatment outcomes. A 2023 audit of AI radiology tools revealed that 12% of automated diagnoses required human correction due to misclassification.

Hiring and Recruitment: AI-powered recruitment tools can screen hundreds of candidates rapidly, but biased training data may favor one demographic over another. A 2023 European Commission report found that 38% of AI-driven recruitment tools could perpetuate gender or ethnic biases if not carefully monitored.

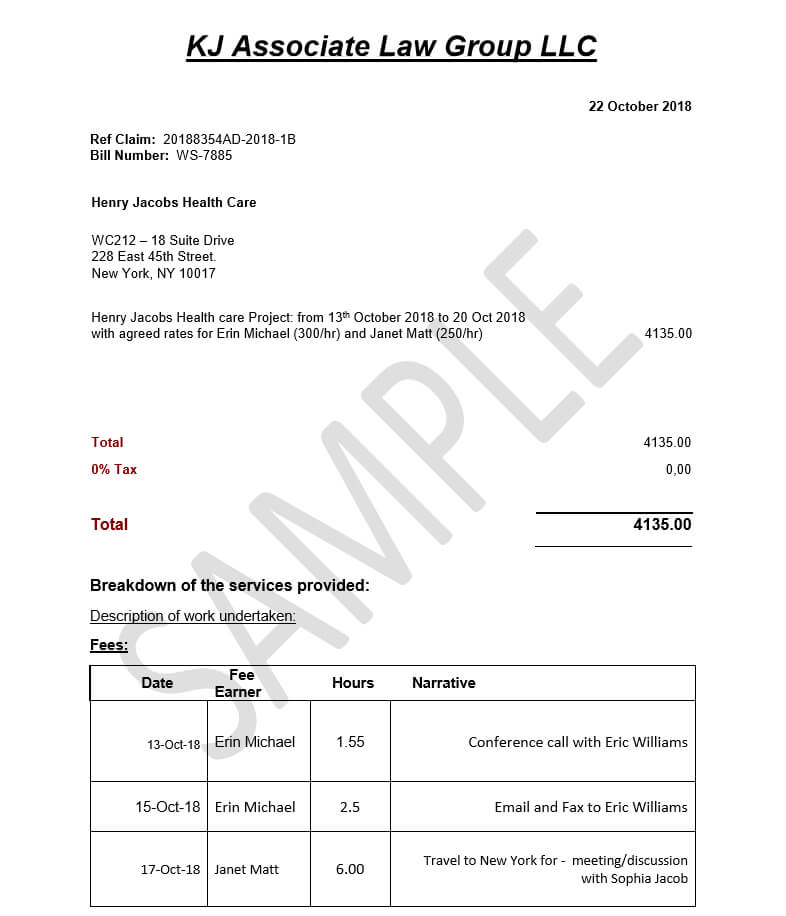

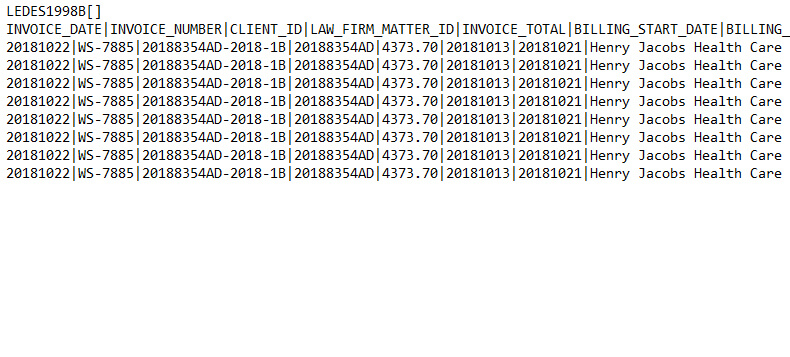

Legal Technology: AI is transforming legal practice by automating contract review, compliance monitoring, billing, and case prediction. Overreliance without oversight can result in flawed advice, noncompliance, or privacy violations. A 2024 survey of 200 EU law firms found that 42% expressed concern that AI tools might introduce legal risks due to unclear accountability mechanisms.

When AI systems make mistakes, the consequences can be severe. Individuals may be denied jobs, loans, or essential services without understanding why, and there may be no clear way to challenge these decisions. Proper regulatory oversight ensures AI operates in a manner that is transparent, accountable, fair, and aligned with societal values, protecting both citizens and businesses.

This is why governments, particularly in Europe, are pushing for AI regulation. The goal is to prevent harm before problems become widespread, so that society can reap the benefits of AI without falling victim to its risks.

The EU AI Act: The First Comprehensive Artificial Intelligence Law in the World

The European Union AI Act represents a historic milestone: it is widely described as the first comprehensive law anywhere in the world dedicated to artificial intelligence. EU lawmakers recognized that AI systems differ fundamentally from traditional software because they can make decisions that directly influence people’s lives, rights, and opportunities. To address these challenges, the EU created a legal framework tailored specifically to AI, establishing a new reference point for global AI governance.

How the EU AI Act Operates

The AI Act adopts a risk-based regulatory approach, meaning that not all AI systems are treated equally. Instead, the rules are calibrated according to the potential risk of harm an AI system may cause. AI technologies are categorized into four tiers:

• Minimal-risk AI systems – largely free of mandatory obligations under the Act, with voluntary codes of conduct encouraged.

• Limited-risk AI systems – requiring clear disclosure to users that AI is involved.

• High-risk AI systems – demanding rigorous compliance measures, including documentation, pre-deployment testing, continuous monitoring, and human oversight.

• Unacceptable-risk AI systems – prohibited due to inherent dangers, such as social scoring or manipulative practices.

This risk-based classification ensures that regulatory oversight is proportionate, safeguarding public safety without unnecessarily stifling innovation.

Defining High-Risk AI Systems

High-risk AI systems are those with the potential to significantly affect people’s fundamental rights, safety, or long-term opportunities. Examples include:

• AI tools used in hiring, recruitment, and employment screening

• Credit scoring and loan approval systems operated by banks

• Medical diagnostics and treatment recommendation AI systems

• AI deployed in government services or public sector decision-making

While high-risk AI systems are permitted under EU law, they are subject to stringent regulatory obligations designed to ensure safety, fairness, transparency, and accountability. These obligations include:

• Comprehensive documentation and audit trails – Developers must maintain detailed records of system design, data sources, decision-making processes, and any modifications, allowing regulators and auditors to review compliance.

• Pre-launch validation and stress testing – Systems must be rigorously tested before deployment to ensure they perform reliably under various scenarios and do not produce biased or unsafe outcomes.

• Ongoing performance monitoring – High-risk AI systems must be continuously monitored in real-world use to detect and correct errors, biases, or unforeseen risks as they arise.

• Active human oversight – Qualified human operators must oversee AI decisions, especially in critical contexts such as hiring, lending, healthcare, or legal services, ensuring that the system’s recommendations can be reviewed, challenged, or overridden if necessary.

By establishing these requirements, the EU aims to mitigate risks while allowing innovation to continue responsibly. The framework ensures that high-risk AI systems can deliver their benefits, like efficiency, predictive insights, and improved decision-making, without compromising safety, ethics, or fundamental rights.
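For teams that want to track this internally, the examples and obligations above can be sketched as a small lookup. This is a minimal illustration in Python, not a legal tool: the names `USE_CASE_TIERS`, `HIGH_RISK_OBLIGATIONS`, `AISystem`, and `outstanding_obligations` are hypothetical, the use-case labels simply mirror the examples in this article, and real classification under the AI Act requires legal analysis.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"
    UNCLASSIFIED = "unclassified"  # not covered by this sketch; needs legal review


# Hypothetical lookup built only from the examples named in this article.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "medical_diagnostics": RiskTier.HIGH,
    "public_sector_decisions": RiskTier.HIGH,
}

# The four high-risk obligations described above, as short labels.
HIGH_RISK_OBLIGATIONS = (
    "documentation_and_audit_trail",
    "pre_launch_validation",
    "ongoing_monitoring",
    "human_oversight",
)


@dataclass
class AISystem:
    name: str
    use_case: str
    completed: set = field(default_factory=set)  # obligations already met


def classify(system: AISystem) -> RiskTier:
    """Return the tier for a known use case, or UNCLASSIFIED otherwise."""
    return USE_CASE_TIERS.get(system.use_case, RiskTier.UNCLASSIFIED)


def outstanding_obligations(system: AISystem) -> list:
    """List high-risk obligations the system has not yet completed."""
    tier = classify(system)
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError(f"{system.name}: use case is prohibited")
    if tier is RiskTier.UNCLASSIFIED:
        raise LookupError(f"{system.name}: use case needs a manual assessment")
    return [o for o in HIGH_RISK_OBLIGATIONS if o not in system.completed]


screener = AISystem(
    name="cv-ranker",
    use_case="hiring_screening",
    completed={"documentation_and_audit_trail", "human_oversight"},
)
print(outstanding_obligations(screener))
# → ['pre_launch_validation', 'ongoing_monitoring']
```

Raising an error for prohibited or unrecognized use cases, rather than returning an empty list, avoids the misleading impression that such systems have nothing left to do.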

Early Implementation in 2025

In 2025, the European Union began applying the first provisions of the AI Act, including the bans on unacceptable-risk practices. These initial measures laid the legal foundation, clarified definitions, and prepared both regulators and businesses for future enforcement. The more demanding rules, particularly those affecting high-risk AI systems, were scheduled for later stages, prompting companies to start planning for compliance while grappling with concerns over costs, complexity, and operational feasibility.

Why EU Policymakers Are Reassessing AI Rules for 2026

As Europe looks ahead to 2026, policymakers face a pivotal question: Can the EU regulate AI robustly without undermining innovation and economic competitiveness?

Some governments and business groups have raised concerns that current rules may:

• Be overly complex and difficult to implement

• Require extensive time for compliance

• Increase operational and legal costs

• Encourage AI development to move outside Europe

These challenges are part of a broader debate about the future of European digital regulation, which affects AI, data governance, cybersecurity, and privacy frameworks simultaneously.

What Are the Other Major EU Technology Laws in Flux?

Several key digital laws interact with the AI Act, each under review:

• General Data Protection Regulation (GDPR): Governs personal data collection and usage. AI systems rely on large datasets, yet current GDPR rules often limit training data availability. Proposed adjustments may allow greater data access for AI innovation while raising privacy concerns.

• e-Privacy Directive: Regulates digital communications, cookies, and online tracking. Overlap with GDPR has caused compliance challenges; reforms could simplify obligations.

• EU Data Act: Focused on access, sharing, and reuse of data, particularly in industrial contexts. Changes here may facilitate AI data pipelines for businesses.

• Cybersecurity Reporting Requirements: Existing rules protect digital infrastructure but are complex. Reforms aim to reduce duplication, simplify reporting, and lessen administrative burdens.

The Digital Omnibus Law: Streamlining Multiple Regulations

To address these challenges, the European Commission proposed a digital omnibus, a single legislative package that coordinates changes across multiple regulations. This proposal seeks to:

• Delay certain AI enforcement deadlines

• Simplify compliance for companies

• Increase access to data for AI systems

• Reduce cybersecurity reporting obligations

The objective is to create a more flexible, coherent, and manageable European digital regulatory framework, balancing safety, innovation, and economic competitiveness.

Support and Opposition: The Debate Intensifies

Supporters argue that Europe must remain competitive in the global AI economy. EU leaders highlight that AI will be a cornerstone of future productivity and innovation. Proponents claim that:

• Streamlined rules will foster AI research and startups

• Compliance costs will decrease, leveling the playing field for smaller companies

• Flexible regulations attract investment and encourage innovation

Conversely, civil society organizations and privacy advocates warn that easing regulations risks undermining fundamental rights. Critics argue that:

• Privacy protections may weaken

• Accountability of AI systems could diminish

• Legal uncertainty may increase

• Large corporations could benefit disproportionately

A group of 127 NGOs signed an open letter describing the reforms as potentially the largest rollback of digital rights in EU history, emphasizing the importance of maintaining safeguards for individuals and smaller entities.

Legal Uncertainty and Public Trust

Frequent regulatory changes can create confusion for businesses, citizens, and regulators alike. Uncertainty about rights and obligations can:

• Erode public trust in AI technologies

• Complicate compliance efforts

• Lead to uneven enforcement across the EU

Global Implications of European AI Regulation

EU laws often set global standards, influencing AI governance worldwide. Changes in Europe could:

• Shape international AI regulations

• Affect global businesses operating in the EU

• Set precedents for privacy, safety, and accountability in AI systems

What Businesses and Individuals Should Watch in 2026

Key developments to monitor include:

• Final enforcement dates for high-risk AI systems

• Updates to data usage and privacy frameworks

• Implementation of accountability and transparency requirements

• Measures safeguarding individual rights

These decisions will have long-lasting effects on AI deployment, shaping how technology interacts with society for years to come. In short, Europe faces a delicate balance:

• Protecting citizens’ rights and digital freedoms

• Supporting innovation and economic growth

The choices made now will define Europe’s role as a global leader in responsible AI development. AI regulation is entering a decisive phase, and its consequences will be profound.

In this critical moment, it is essential for businesses, policymakers, and citizens to stay informed, engage in dialogue, and plan proactively.
